Abstract: An RTP-based audio and video synchronization method is designed for the H.323 video conference system. While strictly complying with the RTP protocol, the method transmits audio and video data through the same media channel to achieve lip synchronization. Experiments show that the method achieves synchronized audio and video playback with little impact on image quality; it is simple to implement, adds no system load, and is widely applicable.

In the H.323 video conference system, lip synchronization is considered achieved if audio and video data captured at the same moment at the sending end are played at the same moment at the receiving end. Audio and video captured together by the terminal must therefore remain synchronized through to playback. To guarantee simultaneous playback, audio and video must take the same total time through capture, processing, and playback. However, the characteristics of IP networks mean that audio and video data transmitted over different channels cannot experience exactly the same delay, which makes lip synchronization a major problem in video conferencing systems. If the playback offset between simultaneously sampled audio and video data stays within [-80 ms, +80 ms], users generally do not perceive any loss of sync; once it exceeds [-160 ms, +160 ms], users clearly notice it. The range in between is critical.
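The perception thresholds above can be expressed as a small classifier. This Python sketch assumes the skew is measured in milliseconds as audio playback time minus video playback time for data sampled at the same instant; the function name and the labels it returns are hypothetical.

```python
def classify_lip_sync(skew_ms: float) -> str:
    """Classify audio/video playback skew using the thresholds quoted above.

    skew_ms: audio playback time minus video playback time (ms) for data
    sampled at the same instant. The sign convention is an assumption of
    this sketch; only the magnitude matters for classification.
    """
    magnitude = abs(skew_ms)
    if magnitude <= 80:
        return "imperceptible"  # within [-80 ms, +80 ms]
    if magnitude <= 160:
        return "critical"       # between the two ranges
    return "noticeable"         # beyond [-160 ms, +160 ms]


for skew in (-40, 120, 200):
    print(skew, classify_lip_sync(skew))
# prints:
# -40 imperceptible
# 120 critical
# 200 noticeable
```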

1 Introduction

1.1 Organization of this article

Section 2 of this article analyzes the shortcomings of existing audio and video synchronization solutions. Section 3 describes in detail the implementation process of the scheme designed in this article. Section 4 gives experimental data and analysis results. Section 5 gives the conclusion.

1.2 Basic introduction

In the H.323 video conference system, apart from the network environment itself, audio and video fall out of sync because they are transmitted separately. Although H.323 recommends transmitting audio and video over different channels, neither RTP [2, 3] nor the underlying UDP protocol that actually carries the data requires a connection to carry only audio or only video. Transmitting audio and video through the same channel is therefore entirely possible, and doing so minimizes the loss of synchronization caused by the network. This paper presents an implementation of this idea and verifies it.

2 Existing solutions

At present, the most commonly used lip synchronization methods fall into two categories according to their underlying idea:

Idea one: the sending end timestamps each RTP packet it sends, recording the packet's sampling time, and the receiving end adds delay so that data sampled at the same time is played at the same time. Methods of this type require a neutral third-party reference clock and the participation of RTCP sender reports (SR) [2, 3]. If either condition is not met, synchronization has no basis.
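Idea one depends on the RTCP sender report, which pairs a wallclock (NTP) timestamp with the RTP timestamp of the same instant (RFC 3550). The minimal Python sketch below shows how a receiver can map each stream's RTP timestamps onto that wallclock and measure the sampling-time skew between audio and video. It assumes the NTP timestamp has already been converted to seconds and that the audio and video SRs are stamped from the same reference clock; the names `SenderReport`, `rtp_to_wallclock`, and `av_skew_ms` are hypothetical.

```python
from dataclasses import dataclass

RTP_TS_MOD = 1 << 32  # RTP timestamps are 32-bit and wrap around


@dataclass
class SenderReport:
    """The two SR fields this sketch needs: the wallclock time (NTP
    timestamp, assumed already converted to seconds) and the RTP timestamp
    corresponding to the same instant."""
    ntp_seconds: float
    rtp_timestamp: int


def rtp_to_wallclock(rtp_ts: int, sr: SenderReport, clock_rate: int) -> float:
    """Map a stream's RTP timestamp to wallclock time using its latest SR."""
    # Signed difference modulo 2**32, so timestamps slightly before the SR
    # and timestamps after a wraparound are both handled.
    diff = (rtp_ts - sr.rtp_timestamp) % RTP_TS_MOD
    if diff >= RTP_TS_MOD // 2:
        diff -= RTP_TS_MOD
    return sr.ntp_seconds + diff / clock_rate


def av_skew_ms(audio_ts: int, audio_sr: SenderReport, audio_rate: int,
               video_ts: int, video_sr: SenderReport, video_rate: int) -> float:
    """Sampling-time difference (audio minus video) in milliseconds."""
    a = rtp_to_wallclock(audio_ts, audio_sr, audio_rate)
    v = rtp_to_wallclock(video_ts, video_sr, video_rate)
    return (a - v) * 1000.0
```

For example, if both SRs were sent at wallclock 1000.0 s, an audio timestamp 800 ticks past its SR on an 8000 Hz clock and a video timestamp 9000 ticks past its SR on a 90000 Hz clock both map to 1000.1 s, so the skew is zero. The receiver would then delay the earlier stream by the measured skew.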

Idea two: loss of lip synchronization is essentially caused by the separate transmission and processing of audio and video in the H.323 video conference system. If audio and video information are associated by some means, loss of sync can be effectively avoided. One implementation embeds audio into the video stream according to a fixed correspondence; the receiving end extracts the audio data from the video and reconstructs it, thereby achieving lip synchronization [4]. This solution is relatively complex to implement, and its non-standard use of RTP makes interworking between H.323 products from different manufacturers difficult.

3 A new audio and video synchronization method

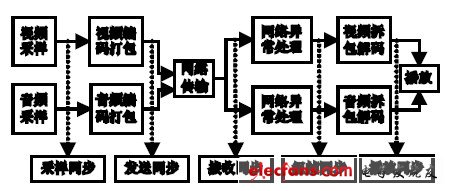

The basic idea of this method is as follows. Audio and video data pass through ten processing stages: sampling, encoding, packetization, sending, network transmission, receiving, network exception handling, depacketization, decoding, and playback. The time taken by sampling, encoding, packetization, depacketization, and decoding is essentially fixed and does not vary with the network environment. The four stages of sending, network transmission, receiving, and network exception handling are far more random: their processing time varies considerably with network performance, which in turn causes audio and video to fall out of sync during playback. The key to lip synchronization is therefore to keep audio and video synchronized through these four stages, that is, the part between send synchronization and framing synchronization in Figure 1.

Figure 1 The whole process of lip synchronization

The time difference caused by the remaining stages can be compensated simply by adding a fixed delay to the audio once the system is stable. In general, audio processing takes less time than video processing, and the exact difference can be determined through repeated experiments.
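A minimal sketch of this fixed compensation, assuming the experiments yield paired latency measurements (in ms) of the fixed stages for a video frame and the audio frame sampled with it. Taking the median of the differences is an assumption made here to damp outlier runs, and both helper names are hypothetical.

```python
from statistics import median


def estimate_audio_delay_ms(video_latencies_ms, audio_latencies_ms) -> float:
    """Estimate the fixed delay to add to audio from paired measurements.

    Each pair holds the fixed-stage processing time of one video frame and
    of the audio frame sampled with it. The median difference is used so a
    few outlier runs do not dominate (a choice made for this sketch).
    """
    diffs = [v - a for v, a in zip(video_latencies_ms, audio_latencies_ms)]
    return max(0.0, median(diffs))  # never delay audio by a negative amount


def schedule_audio_playout(local_ts_ms, audio_delay_ms):
    """Shift audio playout times (local timestamps, ms) by the fixed delay."""
    return [ts + audio_delay_ms for ts in local_ts_ms]


delay = estimate_audio_delay_ms([95, 102, 98, 140], [40, 41, 39, 42])
print(delay)  # prints 60.0
```

Here the differences are 55, 61, 59, and 98 ms, and the median of 60 ms ignores the single slow run that a mean would have absorbed.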

The RTP protocol stipulates that each RTP packet carries a single payload type (PT). If audio and video are transmitted through the same channel, with the audio and video frames captured at the same time sent alternately in sequence, audio and video remain synchronized during transmission while RTP is still respected. Audio data is small, so one RTP packet can carry one audio frame, whereas one video frame requires multiple RTP packets. The end of a frame is signaled by the marker (Mark) field in the RTP header: a value of 1 indicates that the current packet is the last packet of the frame.
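The two header fields this scheme relies on, PT and Mark, share the second byte of the 12-byte fixed RTP header defined in RFC 3550. The sketch below decodes that header with Python's `struct` module. `build_rtp_header` exists only to produce test input, and PT 0 (PCMU) and PT 34 (H.263), static assignments from RFC 3551, are used purely for illustration.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: int
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the fixed RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=b0 >> 6,
        marker=b1 >> 7,          # Mark bit: 1 on the last packet of a frame
        payload_type=b1 & 0x7F,  # PT: distinguishes audio from video
        sequence=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


def build_rtp_header(pt: int, marker: int, seq: int, ts: int, ssrc: int) -> bytes:
    """Build a minimal header: version 2, no padding, extension, or CSRCs."""
    return struct.pack("!BBHII", 2 << 6, (marker << 7) | pt, seq, ts, ssrc)


hdr = parse_rtp_header(build_rtp_header(34, 1, 7, 3000, 0x1234))
print(hdr.payload_type, hdr.marker)  # prints 34 1
```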

According to the above ideas, the specific implementation process of the plan is designed as follows:

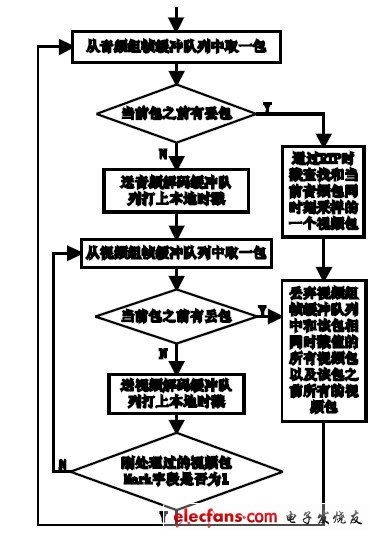

(1) The sender samples the audio and video independently, performs framing and packetization, and places the packets in their respective send buffer queues.

(2) The data sending module fetches data from the send buffers as follows:
1) Take one packet (one frame) from the audio buffer queue.
2) Take packets from the video buffer queue. For each packet taken, check whether the Mark field of the RTP header is 1. If it is 1, the current video frame has been fully taken; go to 1). If it is 0, the current video frame has not yet been fully taken; go to 2).

(3) The audio and video data are sent to the network through the same channel.

(4) The receiver receives the data, distinguishes audio from video by the PT field in the packet header, and places them in their respective receive buffer queues for network exception handling such as retransmission requests and reordering of out-of-order packets [5, 6]. The data then enters the framing buffer to wait for the decoder. Data entering the framing buffer contains no out-of-order or duplicate packets, although packets are occasionally lost.

(5) Audio and video are depacketized and framed; the process is shown in Figure 2:

Figure 2 Schematic diagram of frame synchronization.

(6) Audio and video frames are taken in order from their respective buffer queues and decoded. The local timestamps added to the audio and video data during framing are then calibrated, and audio and video are played synchronously.
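Steps (2), (4), and (5) can be simulated in a few lines. This is an illustrative sketch under stated assumptions, not the authors' implementation: packets are modeled by a hypothetical `Packet` class, PT 0 and PT 34 stand in for the audio and video payload types, and the network exception handling of step (4) (retransmission, reordering) is omitted, so packets are assumed to arrive in order. A video frame whose final packet is lost is simply never emitted.

```python
from collections import deque
from dataclasses import dataclass

AUDIO_PT = 0    # PCMU; assumed audio payload type for this sketch
VIDEO_PT = 34   # H.263; assumed video payload type for this sketch


@dataclass
class Packet:
    pt: int        # RTP payload type
    marker: int    # RTP Mark bit; 1 on the last packet of a video frame
    payload: bytes


def interleave(audio_q: deque, video_q: deque) -> list:
    """Step (2): send one audio packet, then one complete video frame."""
    sent = []
    while audio_q or video_q:
        if audio_q:                # 1) one audio packet carries one frame
            sent.append(audio_q.popleft())
        while video_q:             # 2) video packets until Mark == 1
            pkt = video_q.popleft()
            sent.append(pkt)
            if pkt.marker == 1:
                break
    return sent


def demux_and_frame(packets):
    """Steps (4)-(5): split by PT, then rebuild video frames via the Mark bit."""
    audio_frames, video_frames, partial = [], [], []
    for pkt in packets:
        if pkt.pt == AUDIO_PT:
            audio_frames.append(pkt.payload)
        elif pkt.pt == VIDEO_PT:
            partial.append(pkt.payload)
            if pkt.marker == 1:    # last packet of the current video frame
                video_frames.append(b"".join(partial))
                partial = []
    return audio_frames, video_frames


audio = deque([Packet(AUDIO_PT, 0, b"a0"), Packet(AUDIO_PT, 0, b"a1")])
video = deque([Packet(VIDEO_PT, 0, b"v0-"), Packet(VIDEO_PT, 1, b"end"),
               Packet(VIDEO_PT, 1, b"v1")])
print(demux_and_frame(interleave(audio, video)))
# prints ([b'a0', b'a1'], [b'v0-end', b'v1'])
```

Sending one audio frame and then one complete video frame keeps simultaneously captured data adjacent on the wire, which is what lets the single channel subject both media to the same network delay.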

November 21, 2024

October 24, 2024
